Text with Diacritics

Tone-to-text applications and Text-mining

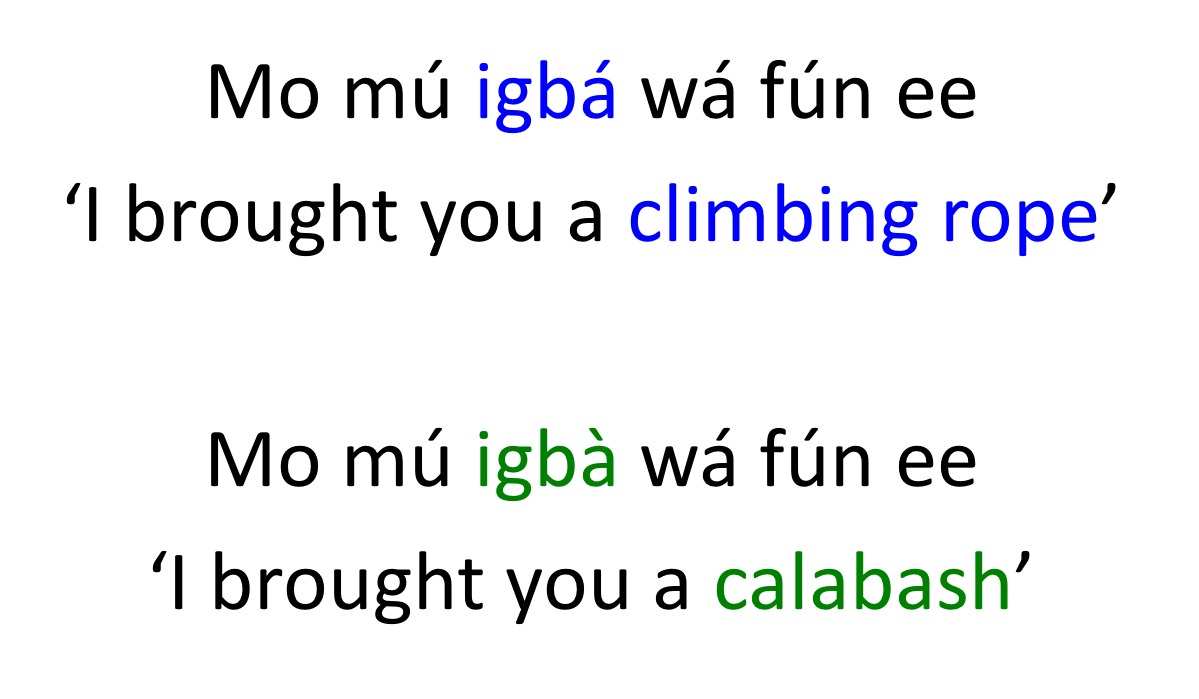

Many languages in Sub-Saharan Africa and creole languages within the Diaspora have unique tonal features. An example is the Yorùbá language of southwestern Nigeria which has three tone levels which provide lexical contrast (see image at right). ADEPt personnel have developed the first tone recognition applications for West African tone languages along with innovative ways to analyze text with diacritics. Transcriptions of our videos and audio are available as .srt files, compatible with ELAN Language Documentation Software, Adobe Premiere, YouTube's Creator Studio and other platforms.